I started day 3 a bit late. The banquet had run really long, with the main course arriving at 22:00. We made the originally planned Funicolare of 23:15, but that meant I was back at my hotel around midnight only. I did not feel up for an 8:30 session, and the topic of K-12 does not necessarily draw me that much either. So, I skipped paper session #8, and hopped back in at the coffee break before paper session #9.

Paper session #9 - Computing Education Research

This session topic seems a bit strange at first glance, because all papers should fall under this common denominator. So what was in here? I would qualify the three papers in this session as meta-research.

Monica McGill and Joey Reyes - Surfacing Inequities and Their Broader Implications in the CS Education Research Community

You can find the work by Monica and her colleagues here.

Monica started the presentation by showing us some numbers. It seems that enrollment of white students is dropping, while other groups are increasingly finding their way to CS education. However, much of our research work is based on white students' experiences. What does the changing composition of student populations mean for the applicability of our previous findings?

So, the authors of the paper set out to identify what the barriers are for researchers to conduct research, specifically from the perspective of diversity, equity and inclusion. From a meta-analysis of CS ed papers, they created a survey to that 72 researchers filled in. The survey is available here. From both of these sources, they identified four categories of issues:

- Capacity. These barriers of capacity include difficulties finding collaborations, balancing teaching and research, and barriers to certain research methods.

- Access. This can mean access to participants for research, but also access to spaces and activities for people with disabilities, and access to work behind paywalls.

- Participation. This is closely related to access, aiming for full, active participation of all interested to engage in the CSEd community.

- Experience. From knowing where to submit your work, to dealing with rejection.

In short, the privilege of time, knowledge and research leads to easier career progression and increased well-being.

Monica showed us some jokes about barriers in research. On this slide: "Thrilled to announce a paper acceptance! I have accepted this paper will not get written" by Adele Levine

Monica showed us some jokes about barriers in research. On this slide: "Thrilled to announce a paper acceptance! I have accepted this paper will not get written" by Adele Levine

Neil Brown - Launching Registered Report Replications in Computer Science Education Research

You can find the work by Neil and his colleagues here.

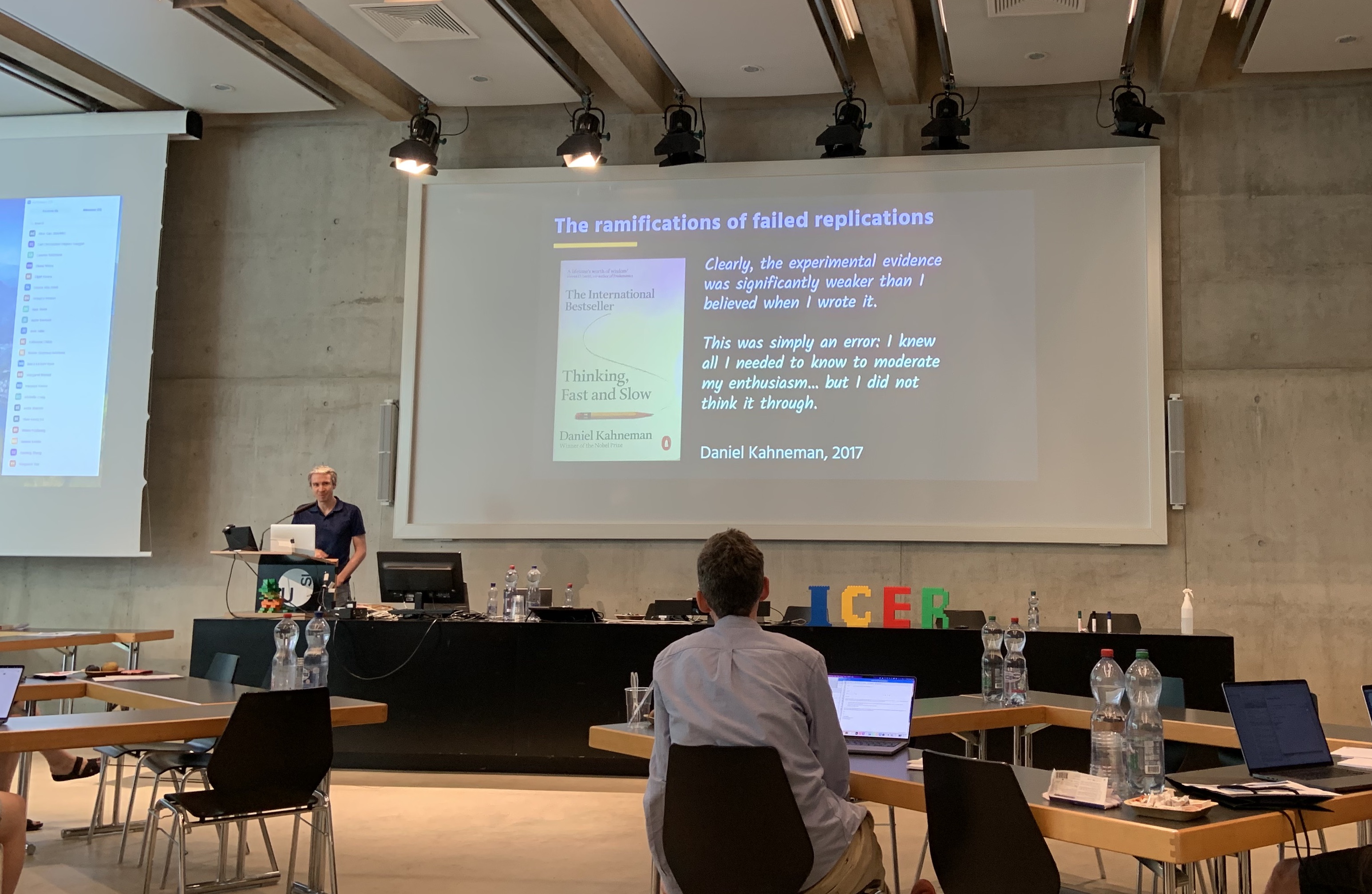

The one thing all researchers want? Reliable research! (who am I kidding, it is an accepted paper first, and citations second, right?) The ongoing replication crisis shows that many scientific studies are difficult or impossible to reproduce. This has been extensively demonstrated in Psychology, but replications have not been in focus for CSEd much yet. However, apparently CSEd researchers think that maybe only 30% of research will replicate.

The core underlying issue is that we must publish. The system pressures us with required numbers for making tenure or getting funding. On top of that we prefer to publish novel things, and only things that are statistically significant. This leads to questionable research practices such as p-hacking, harking or publication bias: if there is no significance, either the author keeps the article in their drawer, or a venue might reject it.

So, how can we get more replication studies done and published, and reduce questionable research practices in the process? There is this approach called registered reports. For a normal paper, you write the whole thing and then send it out for review. For registered reports, you just write the introduction and methods of the paper, before any data gathering and analysis. Then, the idea of the paper can be accepted, without knowing the outcomes.

This approach was then applied by Neil and his colleagues for a special issue of the Computing Education Research journal on replications. If it makes sense for your type of study, they suggest you use it too! For example, you can use the system set up by the Open Science Foundation for this. Neil suggests that sharing your preregistration for your study with your paper submission can show the reviewers that you did not use questionable research processes.

In the discussion after the presentation, Amy immediately opened the floor for pre-registrations for TOCE papers, looking for volunteers to help her set this up. Perhaps the Computing Education Research journal will also add this, but they were not willing to commit on the spot :-)

Neil educates us on the replication crisis and pre-registration.

Neil educates us on the replication crisis and pre-registration.

Benjie Xie and Alannah Oleson - A Decade of Demographics in Computing Education Research: A Critical Review of Trends in Collection, Reporting, and Use

The final paper of this session was presented by Benjie and Alannah, who unfortunately had to move to hybrid ICER as Benjie had tested positive for Covid that morning.

You can find the work by Benjie, Alannah and their colleagues here.

In their paper they examined how CSEd research papers record and use demographic data. What are their populations, how do they record and report this, how is the data used? In the presentation, they focused on what kind of demographics have been reported, and in what language.

For this question, they examined 11 demographic attributes, and in the presentation they focused on gender, race and ethnicity. Some other examples of demographic attibutes from the paper include fluency in the instructional language and socioeconomic status.

For gender, only three in ten papers fully report this information. Some papers partially reported, such as indicating the number/percentage of men. However, this indicates that the authors thought of gender as a binary dichotomy.

Only one in ten papers report on race and ethnicity. Again, they find papers partially reporting, such as those saying 83% of their population was caucasian. Unfortunately, this does not tell us much. Furthermore, two in ten papers use an aggregate term to describe race and ethnicity, such as underrepresented, marginalized, disabilities, etcetera.

For each of the demographic attributes, they also refer to papers that they find did a very good job, and that should be used as exemplars.

The presentation of this group was very short, as they wanted to introduce us to this practice during the session as well. So, we were led to a website where we should analyze a piece of writing about participant characteristics, and then judge it from various angles. This was a very nice addition, to bring into practice what we had just learnt.

Paper session #10 - Responsibility

The final paper session of the conference was on Responsibility, with two papers on very different aspects within this one area!

Noelle Brown - The Shortest Path to Ethics in AI: An Integrated Assignment Where Human Concerns Guide Technical Decisions

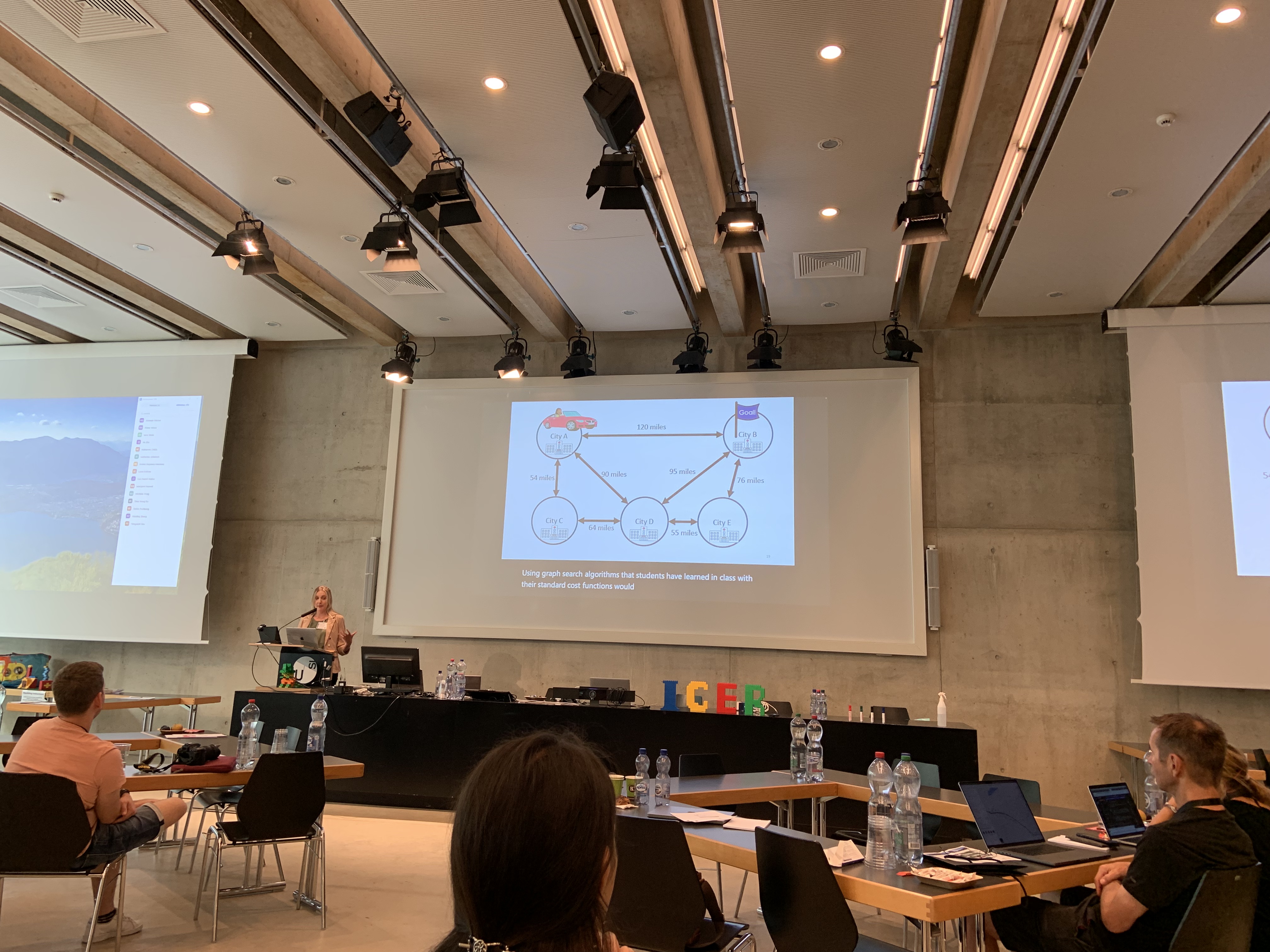

You can find the work by Noelle and her colleagues here.According to Noelle, ethics in technical courses is typically approached through discussing the benefits and harms of technical progress. However, this seems like a very generalized approach. Students should also be aware of the impact of their own contributions. What happens to the code they write, and could they make it more responsible? In short, ethics should be introduced in all steps of the pipeline.

To make this a bit clearer to students (and their teacher) the authors designed and integrated assignment of algorithmic thinking and responsible, human-centered thinking. In the assignment, the students were to write an algorithm that calculated the shortest path that would also take into account access to medical care. To assess whether students were able to apply everything they learnt in the course, a rubric assessed them on three aspects:

- Technical skills. How well does the student define the technical solution? Is this something that contains some algorithmic reasoning? Is it precise?

- Human factors. Do they take the human need at face value, or do they dig more deeply? What might be the human's needs or priorities in any circumstance?

- Integration. Is there alignment between the proposed technical solution and the human factors? A student may have very good solutions to both technical and human needs, but if they are incompatible, the student will still fail.

This last part makes the assignment more than the sum of its parts. You can question whether this assignment really captures ethics (as we did at our table), as there is no introspection or multi-facetedness. However, it does teach the students some nuance over what the 'correct' answer is.

Noelle introduced their integrated assignment.

Noelle introduced their integrated assignment.

Amreeta Chatterjee - Inclusivity Bugs in Online Courseware: A Field Study

The final paper was presented by Amreeta and can be found here.In this study, CS faculty used a tool to identify where their courseware (learning management system, predominantly) had bugs. Some examples of bugs are dead links, inconsistent naming of course aspects, and newcomer unfriendly parts of the system.

I personally had some problems understanding this presentation, as there were quite some assumptions made on what the audience knew. This included the tool, called GenderMag, and the concept of gender-inclusivity bugs. I'm not sure I would call the issues mentioned previously bugs.

In the end, the authors found that the faculty can use the software to find bugs in their LMS. Some were really surprized that bugs remained in their setup, as their courses had been designed around established standards.

Closing, awards and business meeting

Then it was time for the closing session of the conference. Many words of thanks were said, and gifts were shared. Absolutely deserved too, ICER was an amazing experience to me.

This year, the paper awards were given out by a special committee, which means no authors knew of their prizes beforehand. The best paper award went to Juho and his colleagues for their work on generating programming assignments with ML models. The honorable mention went to Maria Kallia and colleagues for their work on rhetorical logic and reasoning in programming. They were both very interesting works, much different from the other papers in the conference. One of the criteria to win is the expectation that this work will be influential in the future of the field, and I fully believe that.

After the closing was done, there was some time for people that had to go to leave and say goodbye. We continued after approximately 10 minutes with the generation of some sort of closing survey. Community suggestions for such questions had been collected from Discord during the conference, and now we went through all to evaluate them. We also removed some questions and added others. The process was relatively smooth, but the setup led to many people in the room talking over eachother, which the virtual participants could not understand. Nonetheless, I think the questionnaire had quite some valuable questions for the new organizers to deal with in their organization next year.

Leisure evening

By the time we were done it was approximately 15:30, so there was lots of time to still do something. Given that restaurants in Ticino often open only by 19:00, there is a need to fill the time too. Although I had brought my swimming gear, like many others, I had not yet tried out the lake. So, we decided that it was time for a swim. Reports had told us that the water was really nice and warm, and we confirmed this ourselves that afternoon :-).

After our swim we had some downtime and chats by the lake, and then we selected a restaurant for dinner. Some non-swimming colleagues also joined us, and in the end we had dinner with 10 people. This was a great closing to an awesome event.

The wednesday dinner group, including some swimmers. Image credit to Megumi Kivuva.

The wednesday dinner group, including some swimmers. Image credit to Megumi Kivuva.

Summary

As you have seen in the reflections of day 1, 2 and 3, I was able to take many useful things away from ICER22. I stuffed my brain full of new research ideas, and I have also made some valuable connections. It was an amazing, interactive event that definitely exceeded my expectations. I really hope to be able to join in Chicago next year. Many thanks to all the organizers: Matthias Hauswirth, Jan Vahrenhold, Kathi Fisler and Diana Franklin, and all supporters: Luca, Andrew, Elisa, Jana and Carolin.